Notes in the Margin - 30 August 2024

Amistics, Enchantment, Reassurance for Clinicians, and More

Notes from a Family Meeting is a newsletter where I hope to join the curious conversations that hang about the intersections of health and the human condition. Poems and medical journals alike will join us in our explorations. If you want to come along with me, subscribe and every new edition of the newsletter goes directly to your inbox.

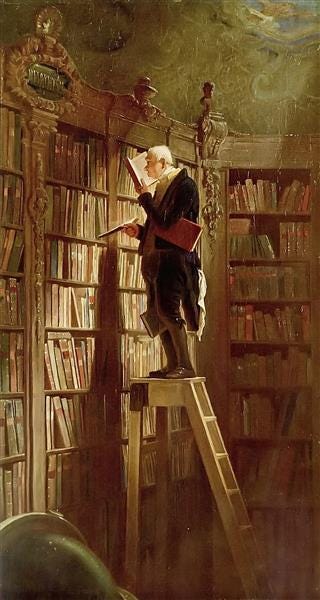

Every so often, I’ll share things I’ve been reading with a few words of mine scribbled in the margins. If you have something to share, please do! The comment section is open.

Artificial intelligence, like all technological innovations, poses challenges. It’s not all benefit. Brian Boyd argues that we could take a page out of the playbook of the Amish to determine which technologies will help us achieve meaningful goals and which won’t. “Our tech debates do not begin by deliberating about what kind of future we want and then reasoning about which paths lead to where we want to go. Instead they go backward: we let technology drive where it may, and then after the fact we develop an “ethics of” this or that, as if the technology is the main event and how we want to live is the sideshow. When we do wander to the sideshow, we hear principles like “bias,” “misinformation,” “mental health,” “privacy,” “innovation,” “justice,” “equity,” and “global competitiveness” used as if we all share an understanding of why we’re focused on them and what they even mean.”

If Your World is Not Enchanted, You’re Not Paying Attention

suggests that enchantment might be a measure of our attention. There are things about the world that don’t give themselves up on first being seen, but require our patient, even grateful and loving, attention to recognize.

The Absence of Reassuring Counterfactuals in Medicine

shares an inevitable reality of clinical decision-making: we can’t know how things would have otherwise turned out. One thing that assuages my concerns about this is when my recommendations are linked with the patient's goals and values. Of course, very few people are sitting around on a Saturday night pondering their goals of care for fun. Discerning the goals of care is often an ad hoc process of helping someone realize what kinds of trade-offs they'll need to make down different paths of medical intervention. In this way, the clinician becomes a "choice architect."

Nevertheless, if I know someone's goals and values, we can take what we know about their disease and medical technology and create a plan that can pursue those things, albeit with eyes wide open about what the trade-offs will be. Yes, maybe if we did something different, we would have achieved a "better" outcome, but I can't twist their arm to choose otherwise.

Technology Makes You More than Alive or More Than Dead

laments something I see every day in my clinical work: technology designed to bring us into greater health actually alienates us from it: “What machines do, ideally, is give us time to focus on each other. We use machines to do the opposite — to distract and alienate ourselves from the very people we created the machines to protect and enjoy. … Death reminds us of what matters and hopefully reminds us to aim our machines at what matters.” Remarkably, this distraction happens all the time, as I frequently revisit (e.g., here and here). We do need to be careful about seeking some kind of transcendence through technology and, on the way, degrading those lives that don’t measure up.

From the Archives

Here's something, only a little dusty, that new readers may not have seen.

“Can we recognize, and help others to recognize, that people themselves do not become burdens just because they’re sick? They can, though, share the burdens with those closest to them. Sometimes those shared burdens burn out caregivers. That still doesn’t mean the person has become the burden. It means that the burden of their illness has become too great even for multiple people to bear.”

Can People Become Burdens?

The “right to die” seems a strange child of the bloodiest century in history.

Hi Dr. did you see the NYT article about Acadia trapping patients? Thoughts on it

I think a serious problem for all physicians is when a patient "knows" something medically that just isn't so.